There have been repeated calls for “space” in many fields of social science (all links are behind paywalls, sorry):

- Demography: (Voss 2007)

- Sociology: (Gieryn 2000)

- Epidemiology: for an early critical review (Jacquez 2000)

- Geography: obviously geographers were into space before it was cool. A couple of pieces I like are Doreen Massey’s book, For Space, and O’Sullivan (2006) for a review of GIS.

- Anthropology: the proceedings of a conference including a piece by Clifford Geertz, Senses of Place (1996). Though what I’m writing here has less to do with the space/place debate.

These are nice papers about what the authors think should be new research agendas, but I think social sciences need to stop calling for space and start “playing” with space. Let me explain…

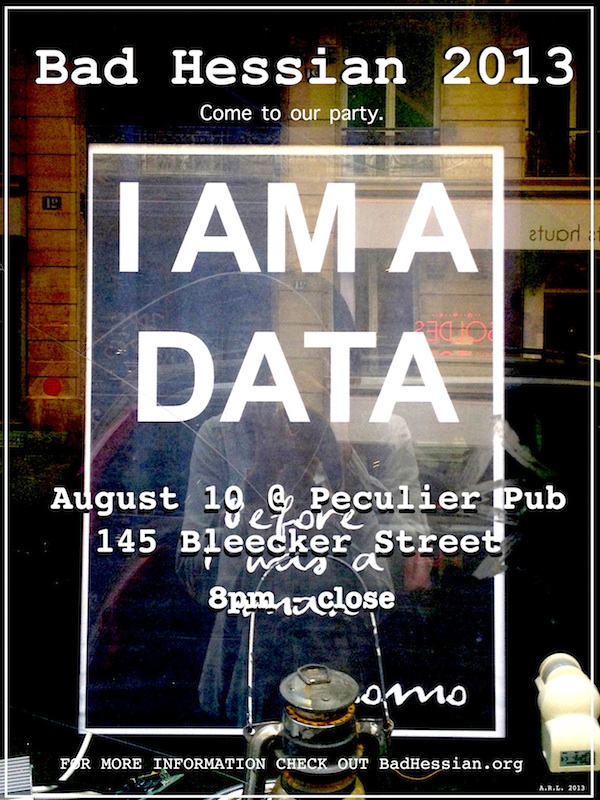

This idea started when fellow Bad Hessian, Alex Hanna, suggested that I read a paper about spatio-temporal models of crime in Chicago. We are in the same writing group. Alex has suffered through many presentations of a paper I’m writing about crime in Chicago. Chicago? Crime? I mean these have to be related papers, right? So I gave it a quick read:

Seth R. Flaxman, Daniel B. Neill, Alex J. Smola. 2013. Correlates of homicide: New space/time interaction tests for spatiotemporal point processes. Heinz College working paper, available at: http://www.heinz.cmu.edu/faculty-and-research/research/research-details/index.aspx?rid=483

…and it’s a really great paper! Flaxman reviews three standard measures for spatial and temporal independence and then proposes a new measure that can simultaneously test for spatio-temporal dependence. The measures are validated against real crime data from Chicago. On the other hand, it’s also completely useless for my project. I mean, I stuck it in a footnote, but I can’t engage with it in a substantively meaningful way because my paper is about the Modifiable Areal Unit Problem and the good ol’ MAUP is fundamentally about polygons — not points. The MAUP occurs because a given set of points can be aggregated into any number of different polygon units, and the subsequent results of models, bivariate relationships, or even hot spot analysis might change based on the aggregation method.
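To make the MAUP concrete, here is a toy sketch in Python. The point coordinates and the three zoning schemes are made up for illustration; none of it comes from the Chicago data or from either paper. The same eight points are counted under three different sets of rectangular zones.

```python
from collections import Counter

# Hypothetical incident locations on a 4 x 4 square (illustrative only).
points = [
    (0.9, 0.5), (1.1, 0.5), (1.2, 1.5), (1.9, 2.5),
    (2.1, 2.5), (2.2, 3.5), (3.5, 0.5), (3.6, 3.5),
]

def aggregate(points, cell_width, cell_height):
    """Count points per rectangular cell, keyed by (column, row) index."""
    counts = Counter()
    for x, y in points:
        counts[(int(x // cell_width), int(y // cell_height))] += 1
    return counts

# Three zoning schemes over the same points (all assumed for the example).
vertical_strips = aggregate(points, 1.0, 4.0)    # four tall zones
horizontal_strips = aggregate(points, 4.0, 1.0)  # four wide zones
quadrants = aggregate(points, 2.0, 2.0)          # four square zones

for name, counts in [("vertical", vertical_strips),
                     ("horizontal", horizontal_strips),
                     ("quadrants", quadrants)]:
    print(name, sorted(counts.items()))
```

With vertical strips the densest zone is the second column, with horizontal strips it is the bottom row, and with quadrants two opposite corners tie. Nothing about the points changed, only the polygons drawn over them.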

This means that Flaxman’s approach and my approach are not comparable because they each rest on different assumptions about how to measure distance, social interaction, and spatial dependence. They’re based on different spatial ontologies, if you will. But back to the main argument of this post: could we play around with the models in Flaxman, the models I’m making, plus some other models in order to test some of the implications of our ideas of space? Here are some hypothetical hypotheses….

Isotropy. Isotropy means that effects are the same in every direction. For example, weather models often take into account anisotropy because of prevailing wind direction. As Flaxman mentions at the end of the paper, alternative distance measures like Manhattan distance could be used. I would take it a step further and suggest that distance could be measured across a trend surface which might control for higher crime rates on the south side of Chicago and in the near-west suburbs. Likewise, spatial regression models of polygon data can use polynomial terms to approximate trend surfaces. Do the additional controls for anisotropy improve model fit? Or change parameter estimates?
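A minimal sketch of two of the ingredients mentioned above: Euclidean versus Manhattan distance, and the polynomial terms one might add to a regression to approximate a trend surface. The function names are my own, and the trend surface part only builds the regressors; it does not measure distance across a surface, which would take more work.

```python
import math

def euclidean(a, b):
    """Straight-line ("as the crow flies") distance between two (x, y) points."""
    return math.hypot(a[0] - b[0], a[1] - b[1])

def manhattan(a, b):
    """City-block distance: travel along a grid of streets."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])

def trend_surface_terms(x, y, degree=2):
    """Polynomial terms x^i * y^j with 1 <= i + j <= degree.

    For degree 2 the order is: x, y, x^2, x*y, y^2.
    These could be added as covariates in a regression on polygon centroids.
    """
    terms = []
    for total in range(1, degree + 1):
        for i in range(total, -1, -1):
            terms.append(x ** i * y ** (total - i))
    return terms

a, b = (0, 0), (3, 4)
print(euclidean(a, b))            # 5.0
print(manhattan(a, b))            # 7
print(trend_surface_terms(2, 3))  # [2, 3, 4, 6, 9]
```

Swapping one distance function for the other, or fitting a model with and without the trend terms, is the kind of cheap comparison I have in mind.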

Spatial discontinuities. A neighborhood model posits (albeit implicitly and in a sort of wishy-washy way) that there could be two locations that are very close as the crow flies, but are subject to dramatically different forces because they are in different polygons. These sharp breaks might really exist, e.g. “the bad side of the tracks”, red-lining, TIF funding, empowerment zones, rivers, gated suburbs. Or they might not. Point process models usually assume that space is continuous, i.e. that there are no discontinuities. Playing around with alternative models might give us evidence one way or another.

Effect decay. In spatial regression models like the ones I’m using, it’s pretty normal to operationalize spatial effects for contiguous polygons and then set the effect to zero for all higher order neighbors. As in the Flaxman paper, most point models use some sort of kernel function to create effect estimates between points within a given bandwidth. These are both pretty arbitrary choices that make spatial effects too “circular”. For example, think of the economic geographies of interstate interchanges in middle America. You’ll see fast food, big box retail, gas stations, car dealerships, hotels, etc. at almost every interchange. Certainly there is a spatial pattern here but it’s not circular and it’s not (exponentially, geometrically, or linearly) decaying across distance. Comparisons between our standard models, where decay is constrained to follow parametric forms, and semi-parametric “hot spot” analyses might tell us if our models of spatial effects are too far away from reality.
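Here is a rough sketch of the weighting schemes described above, written as plain Python functions. The Gaussian form, the hard cutoff at the bandwidth, and the parameter names are assumptions for illustration; they are not the specific kernel used in the Flaxman paper or in my own models.

```python
import math

def contiguity_weight(neighbor_order):
    """Polygon-style weight: 1 for first-order neighbors, 0 for all others."""
    return 1.0 if neighbor_order == 1 else 0.0

def gaussian_kernel_weight(distance, bandwidth):
    """Point-style weight: Gaussian kernel truncated at the bandwidth."""
    if distance > bandwidth:
        return 0.0
    return math.exp(-0.5 * (distance / bandwidth) ** 2)

def exponential_decay_weight(distance, rate):
    """Weight that shrinks exponentially with distance."""
    return math.exp(-rate * distance)

# Each weight depends only on how far apart two units are, never on direction.
for d in (0.0, 0.5, 1.0, 2.0):
    print(d,
          round(gaussian_kernel_weight(d, bandwidth=1.0), 3),
          round(exponential_decay_weight(d, rate=1.0), 3))
```

Every one of these takes a single distance (or neighbor order) as input, which is exactly why the implied effects come out “circular”: a fast food cluster strung along an off-ramp has no way to show up in the weights.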

Ok. Those sound like valid research questions, so why not just do that research and publish some results? As I see it, spatial work in social sciences usually boils down to two main types of writing. First, there are the papers that aren’t terribly interested in the substantive research areas, and are more about developing statistical models or testing a bunch of different models with the same data. Here are some examples of that type:

- (Dormann et al 2007) undertake a herculean task by explicating and producing R code for no fewer than 13 different spatial models.

- (Hubbard et al 2010) compare GEE to mixed models of neighborhood health outcomes.

- (Tita and Greenbaum 2009) compare a spatial versus a spatio-social network as weighting matrices in spatial regression.

The problem with this approach is that the data is often old, simplified data from well-known example datasets. Or worse yet, it is simulated data with none of the usual problems of missing data, measurement error, and outliers. At best, these papers use oversimplified models. For example, there aren’t any control variables for crime even when there is a giant body of literature about the socio-cultural correlates of spatial crime patterns (Flaxman and I are both guilty of this).

The second type of research would be just the opposite: interested in the substantive conclusions and uninterested in the vagaries of spatial models. They might compare hierarchical or logistic regressions to the spatial regressions, but very rarely go in depth about all the possible ways of operationalizing the spatial processes they’re studying. And when you think about it, you can’t blame them because journal editors like to see logical arguments for the model assumptions used in a paper, not an admission that we don’t know anything about the process under study and a bunch of different models all with slightly different operationalizations of the spatial process. But here’s the thing: we don’t actually know very much about the spatial processes at work! And we have absolutely no evidence that the spatial processes for, say, crime are also useful in other domains like educational outcomes, voting behavior, factory siting, human pathogens, or communication networks.

Thus, we don’t need more social science papers that do spatial models. We need (many) more social science papers that do multiple, incongruent spatial models on the same substantively rich datasets. I think it’s only by modeling, for example, crime as an isotropic point process, a social network with spatial distance between nodes, and a series of discrete neighborhood polygons that we can start to grasp if one set of assumptions about space is more/less accurate and more/less useful. In case you couldn’t tell, I’m a big fan of George Box’s famous quote. This is the slightly longer version:

“Remember that all models are wrong; the practical question is how wrong do they have to be to not be useful.” (Box & Draper 1987, 74)

Good luck, and go play!

[Update, 2013-07-22: I changed the citation to the Flaxman paper, as it is now a working paper in his department at Carnegie Mellon University.]